Nederlab: Concerns from the Research Perspective

Nederlab is a recently awarded Dutch Science Foundation large infrastructure investment. The successful proposal for a €2.4 million subsidy was carried by an impressive consortium of leading researchers in the fields of linguistics, history, and literary scholarship. Reading the proposal, I find several serious issues that may cripple the project from the start. I think both the researchers and the technicians involved should tackle these issues sooner rather than later.

According to the proposal, Nederlab should be a “laboratory for research on the patterns of change in the Dutch language and culture” (Nederlab 2011, p.2). “Scholars in the humanities – linguists, literary scholars, historians – try to understand processes of change and variation […]. To study these processes, large quantities of data are needed […].” The goal of Nederlab is “to enable scholars in the humanities to find answers to new, longitudinal research questions.” “Until now […] research has of necessity mainly consisted of case studies detailing comparatively brief periods of time. As more historical texts become available in digital form, new longitudinal research questions emerge, and systematic research on the interaction of changes in culture, society, literature and language can be mooted.” Let’s recap this in a bulleted list. Nederlab…

- is for linguists, literary scholars, and historians

- is meant to support longitudinal humanities research

- relies on detecting patterns in language and culture

- relies on the historical texts that have become available in digital form

The subsidy application continues: “From an international perspective, scholars of Dutch history, literature and language are in an excellent position to develop new perspectives on topics as the ones described above. The Netherlands is a central player in the international eHumanities debate, in particular with respect to infrastructure and research tools (cf. CLARIN and DARIAH).”

So we add to our list of Nederlab characteristics:

- relies on CLARIN and DARIAH related infrastructure and tools

The main body of the plan describes research cases and questions that could be answered given a historical corpus spanning 10 centuries. Most of the use cases focus on the period from the 18th century onward. Most are linguistics oriented, or use linguistic parsing as a means to derive observations from digital data. So we again expand our list:

- focuses on post 1700 use cases / material

- relies on automated parsing and tagging for its research paradigm

The Nederlab application then also states a contingency strategy: “There is heterogeneity in the data: a large slice of the corpus is of poor quality since it consists of poor OCR. In compensation, a number of representative high-quality corpora will directly be incorporated as core corpora in the demonstrator. Data curation will gradually revalue the poor parts of the corpus, a necessary measure as weaker data is difficult to access by tools. The problem can be mitigated by designing the tools in such a way that they bypass the problems of weaker data, and by enabling researchers to compose subsets of the corpus from which weaker data are barred.” (Nederlab 2011, p.27) Thus we can also add to our list:

- uses a historical corpus that is for a large part of poor quality

- cannot use automated tools to remedy this (or can do so only to a very limited extent)

- will rely on manual curation to remedy this over time

- ignores poor quality data (“weaker data are barred”)

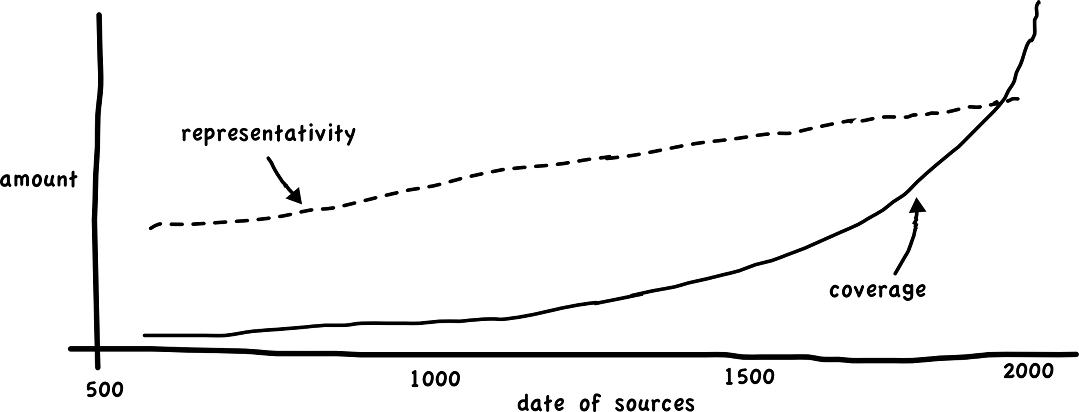

Now, let’s combine these characteristics with what we know from experience about the current state of digital affairs in humanities research from a longitudinal perspective. First, let’s look at how representative our sources are. The farther back in time we move, the less coverage we have (many sources have been lost). The sources we do have are fortunately somewhat representative (they are a good enough ‘sample’ of what went on), but their quality also gets poorer the farther back we go. Simply put, the availability of our material is skewed towards roughly post-1800 material. This means that the research material available for diachronic research is, in itself, already biased towards the period after 1800 AD.

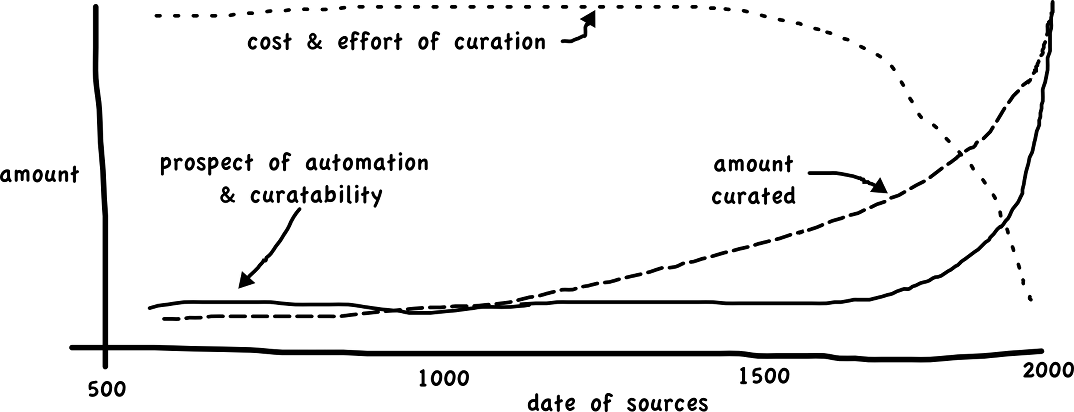

Now let’s look at the level of curation and curatability. Here the situation is even more skewed. Automated tools for scanning, OCR, and POS tagging are only effective on more recent material. Material from before roughly 1800 requires a costly and labor-intensive manual effort to be digitized. This is, first, because of the form, format, and language (spelling and orthography) of the documents, and second, because the form and amount of the material do not allow for automatic parsing. Currently, patterns of culture cannot be detected in older material through a purely automated approach. The common linguistics strategy of auto-tagging, then reducing noise, and then inferring patterns does not work here. What is needed instead is a strategy of manual annotation, curating noise into data, and then inferring patterns. Even if we want to apply the quicker automated strategy, we first need training corpora… which must themselves be curated manually.

So the conclusion from a research perspective must be that the material we have, or can fairly easily curate, favors research on the later period (after 1800). Let’s take a look at the composition of our research audience over the time period:

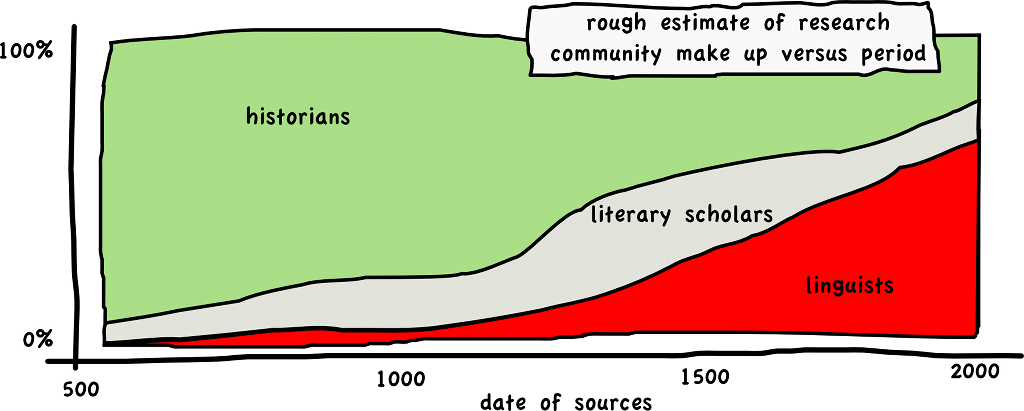

Historians are interested in (surprise?) history. Linguists are predominantly interested in fairly recent states of the language, which follows logically from the fact that data must be readily available to do any meaningful empirical linguistic research. Literary scholars have an interest that is fairly evenly distributed through time, but they depend on available material, which means they only come into ‘full swing’ after roughly 1200 AD. In any case, the available material and the periods of study again seem to skew towards linguists.

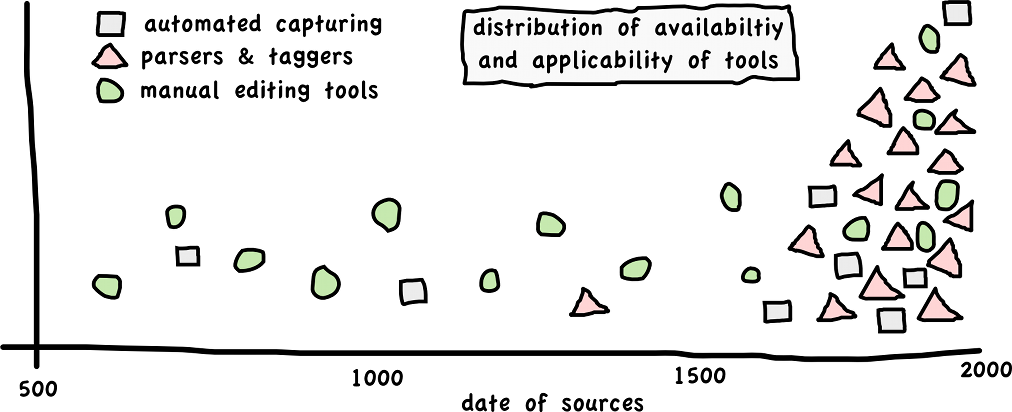

What is the tool situation? Because commercially employed software engineers need to focus on economic added value, language tools and pattern recognition tools have been designed for present-day human language and cultural artifacts (texts, audio, film, etc.). Almost no editing, capturing, or annotation software is readily applicable to, or even available for, older language periods. This is in part also why the predominant ‘digital paradigm’ of humanities research on the older language phases still relies on manual tag, link, and infer strategies: no serious effort in automated recognition, capturing, or tagging of older language has ever been undertaken. This means there are plenty of automated taggers and parsers… for modern Dutch. For older Dutch there are at most some suitable transcription tools (for instance eLaborate). The tool categories listed in the proposal (tokenization, spelling normalization, part-of-speech tagging, lemmatization; Nederlab 2011, p.7) are effectively available only for material from roughly 1700 AD onward.

The question of paradigm is of particular interest here. The more statistics-oriented paradigm of linguistics is facilitated by the fact that automatic formalization of modern language material is highly feasible. Few doubt that such a paradigm will also be useful for the (pattern) analysis of historical material by historians and literary scholars. But we utterly lack both the tools and the knowledge of which properties should be formalized. Nederlab actually provides an excellent occasion to study this problem and make significant headway in these types of formalization.

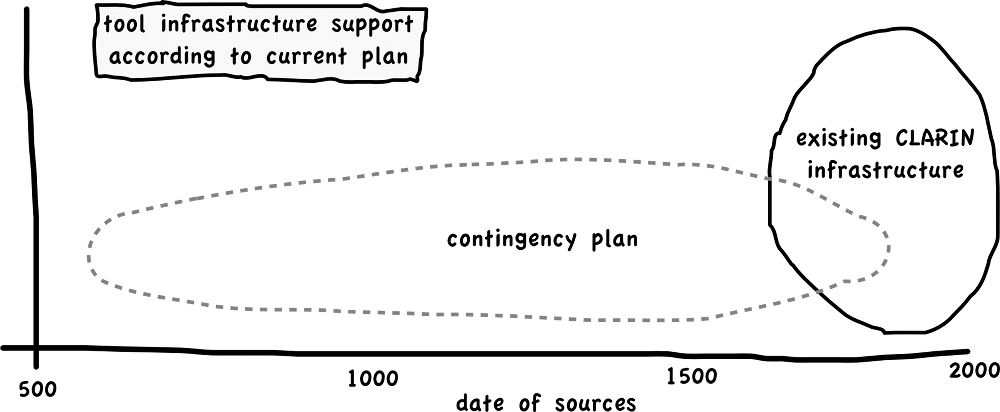

It should now be clear that, in terms of research interest, available digital material, prospects for digitization, and the availability and applicability of existing tools, the situation and outlook strongly favor modern-era linguistics research. The older phases have problems with the amount of available sources and data, with the ability to digitize data because of the heterogeneity in form and format of the sources, with the ability to apply automated analysis to the data, and with the prospect of tools being adapted to them. In short, the technical problems and difficulties lie predominantly with the older language phases. This is where we need an extra effort to resolve the situation, so that computational approaches can be applied and the full advantage of a diachronic corpus can be realized. The proposal’s contingency plan, however, suggests that we should expect the opposite.

The proposal states in no uncertain terms that the contingency plan is to ignore poor-quality data. Applied to virtually any data from before 1800, this strategy will leave no data, or far too little data for any useful automated pattern analysis.

The major advantage, headway, and opportunity the Nederlab project could create is the ability to digitally manipulate and analyze a true diachronic corpus with appropriate tools. As things are formulated now, however, it looks as if we will end up with yet another linguistics sandbox focused on the 19th and 20th centuries. That would be a major missed opportunity. From the run-up to and preparation of the proposal, I know personally that minds were alike and that the overall intention was indeed to make a decisive push for longitudinal resources for longitudinal language and culture research. To make this happen, we need to focus on the left side of the skraphs (from ‘sketch graphs’) I drew here. It is pretty clear that the right side is already well supported. This is therefore a call to arms for all researchers and developers involved to put the focus where it ought to be, and not on the low-hanging fruit.

references

- (Nederlab 2011) Nederlab: Laboratory for research on the patterns of change in the Dutch language and culture. Application for Investment Subsidy NWO Large. Meertens Institute, Amsterdam/The Hague, October 2011. http://www.nederlab.nl/docs/Nederlab_NWO_Groot_English_aanvraagformulier.pdf (accessed Sunday 14 October 2012).